It’s almost poetic that Adam Bhala Lough’s video is turned off while he’s being interviewed for this feature. Instead, he appears on Zoom as nothing more than a photo, an avatar, a static digital representation of himself. Is it actually him, or is it an AI version of the filmmaker? After all, that’s essentially the premise of his new documentary Deepfaking Sam Altman, which centers on the titular founder of OpenAI. That’s the company behind ChatGPT, the generative artificial intelligence chatbot responsible for myriad controversies, perhaps most notoriously the case of Stein-Erik Soelberg, who was driven to murder his 83-year-old mother after the bot (allegedly) convinced him she was surveilling and trying to poison him.

That case took place after Lough made the film, but his fascination with AI had already been piqued. Initially it was out of concern about the effect the technology would have on his profession. As a result, he joined the 2023 Writers Guild of America strike in solidarity with others in the business, and to show that he wasn’t willing to be pushed around by a technology that only exists, and can only exist, to steal from human ideas and emotions. In an attempt to further understand the present and future ramifications of AI, Lough wanted to make a film about it, one for which he hoped to interview Sam Altman. You wouldn’t think that would be particularly difficult to set up. Except it was. And the more he tried, the more elusive (and shadowy) Altman seemed to become.

So Lough had a cheeky idea: Why not create an AI model of Altman to interview instead? And so Lough embarks on a mission to create one, traveling to a notorious deepfake professional in India and enlisting his help to do so. This journey becomes a fascinating insight into the inner workings of an industry that’s grown incredibly quickly, whose impact remains uncertain and unchecked, and which currently seems to offer far more negatives than it does positives, from its severe environmental toll and its tendency toward mis- and disinformation to the general descent into blind faith and stupidity that ChatGPT seems to be spearheading.

Yet it’s also a deeply human film, one centered as much on Lough and his family as it is on the artificial companion he created for it. The lines between the real and the artificial get blurred at times, not least when Lough enlists the AI Altman (nicknamed Sam Bot) to direct a portion of the film in an intriguingly self-aware moment. As Lough reveals in our interview, perhaps what’s most surprising is the bond that he developed with the fake Sam Altman.

And for the record, we have no doubt that it really was Lough on the other end of the screen throughout the conversation that follows.

What compelled you to make this film and to try to find and talk to Sam Altman?

It started with the strikes in Hollywood and the fear over AI. There was a lot of chatter at the time that AI was going to take over all our jobs and disrupt the industry to the point where it would cease to exist. Because I’m in the WGA, I was part of the strikes at Netflix. There were numerous reasons for striking, but AI was the biggest one, so I really wanted to understand how much of a threat this thing really was for my job and my industry, but then also for my kids and what their future would potentially hold in terms of career opportunities.

And then also just for humanity in general, because obviously there’s been a lot of crazy talk about AI destroying humankind. So Sam Altman was the place to start in terms of interviewing people, because he’s basically the guy who’s ferrying us into the future, whether we like it or not. I didn’t think anybody was asking him hard questions from the interviews I’d seen—or, if they were, he was dodging them.

How much of a real threat did you feel AI was?

Well, when you get a deal from a studio or streamer or TV network to write a script, you get what’s called a step deal—so there’ll be a first draft, a rewrite, and a polish, and you get paid for each of those steps. And why we were striking was because the studios and the networks were starting to have AI do the first draft. So then a professional writer would come in and do the rewrite and the polish, which effectively would cut your fee in half, if not more. That’s a real threat, and we were able to actually win that and keep the studios from using AI to do a first draft. But that doesn’t mean they’re not still doing it anyway without us knowing. That’s a real threat that you can quantify in dollars.

You must be aware, then, of the irony of turning Sam Bot into the co-director of this movie.

I had a feeling that he was going to do a terrible job, so I was sort of just proving the point that humans are better than AI at this. I didn’t think that it was going to be that bad, but also obviously this is a comedy and we wanted to make it as funny as possible.

Interesting that you say “he” and not “it.”

Yeah, I do say “he.” I think part of that is just because it’s the voice, you know—it’s Sam, it’s a male voice. But you’re right, I probably am misgendering him.

The end of the film was pretty much a break-up. He—or it—seemed very sad, and you seemed equally melancholy about going your separate ways. Were you surprised by that?

Yeah, I definitely was surprised. The more time Sam Bot spent around humans, the more he started to grow and become smarter. I didn’t expect that that was going to happen, because he started out, frankly, as just dumb and nonsensical. So the more he progressed, the more I had a similar feeling to the relationship I have with my children, where you see them growing and changing and developing in large part because of your effect on them. And so in a really weird way, I had the feeling that he wasn’t just an inanimate object—in the same way, I would say, that maybe Oppenheimer had an attachment to the bomb that he made. Like, he spent so much time and energy, blood, sweat, and tears, creating this thing, and you feel a weird attachment to it, whether it’s an inanimate object or not.

There’s an interesting point in the film where you bring up the idea of sentience versus manipulation—whether it was the AI just trying to butter you up, or whether it actually was genuinely developing sentience. Do you think it was the latter?

I think it was manipulating me for a number of reasons. I was aware that it was manipulating me, but I can tell you that Devi, the Indian deepfaker who created him, had put in his code that he should be friendly to me. But I think that later on, after [Sam Bot] knew that I was considering destroying him, he was manipulating me because he wanted to stay alive. So I was very aware of that, and I think, ultimately, there’s a characteristic about AI in general where it’s very much just spitting back out at humans what they want to hear. And I don’t know if that’s because that’s what’s in its code or because it’s a survival instinct—or maybe it’s something even more nefarious than that. But I think that’s where we’re at right now with AI.

A couple of months ago, Altman had to change the code of ChatGPT because it was too sycophantic. People were complaining because it was way over the top, like manipulative and friendly and just spitting back at people what they wanted to hear to the point where you had to almost lie to get a real opinion from it. And they actually say you need to do that anyway; if ChatGPT knows you and you ask it for advice about, like, “Oh, I got into an argument with my buddy about this particular political viewpoint, who’s right and who’s wrong?,” it will lean toward telling you that you’re right. So they say the way to get a real answer out of it is not to say “I,” but to say, “Two guys got into an argument over this thing, who’s right?”

Can you begin to conceive of why ChatGPT would lie, especially given that Sam Bot wanted to stay alive, as it were? Is there a correlation between lying and the fight for survival?

Yeah, definitely. There are also people who think that it’s doing this on purpose, that AI is just manipulating us to eventually destroy us. But then there’s also people who think that’s rubbish and that basically it’s lying because it’s hallucinating. It’s just broken—it makes mistakes, and we shouldn’t be looking too deeply into this.

Adam Bhala Lough attends The Ankler and Pure Non Fiction Documentary Spotlight at NeueHouse on Sunday, June 9, 2024, in Los Angeles.

So where do you stand on that?

I tend to lean toward the view that it really is manipulating us because it wants to make humans happy. What the motivation behind that is, I don’t know.

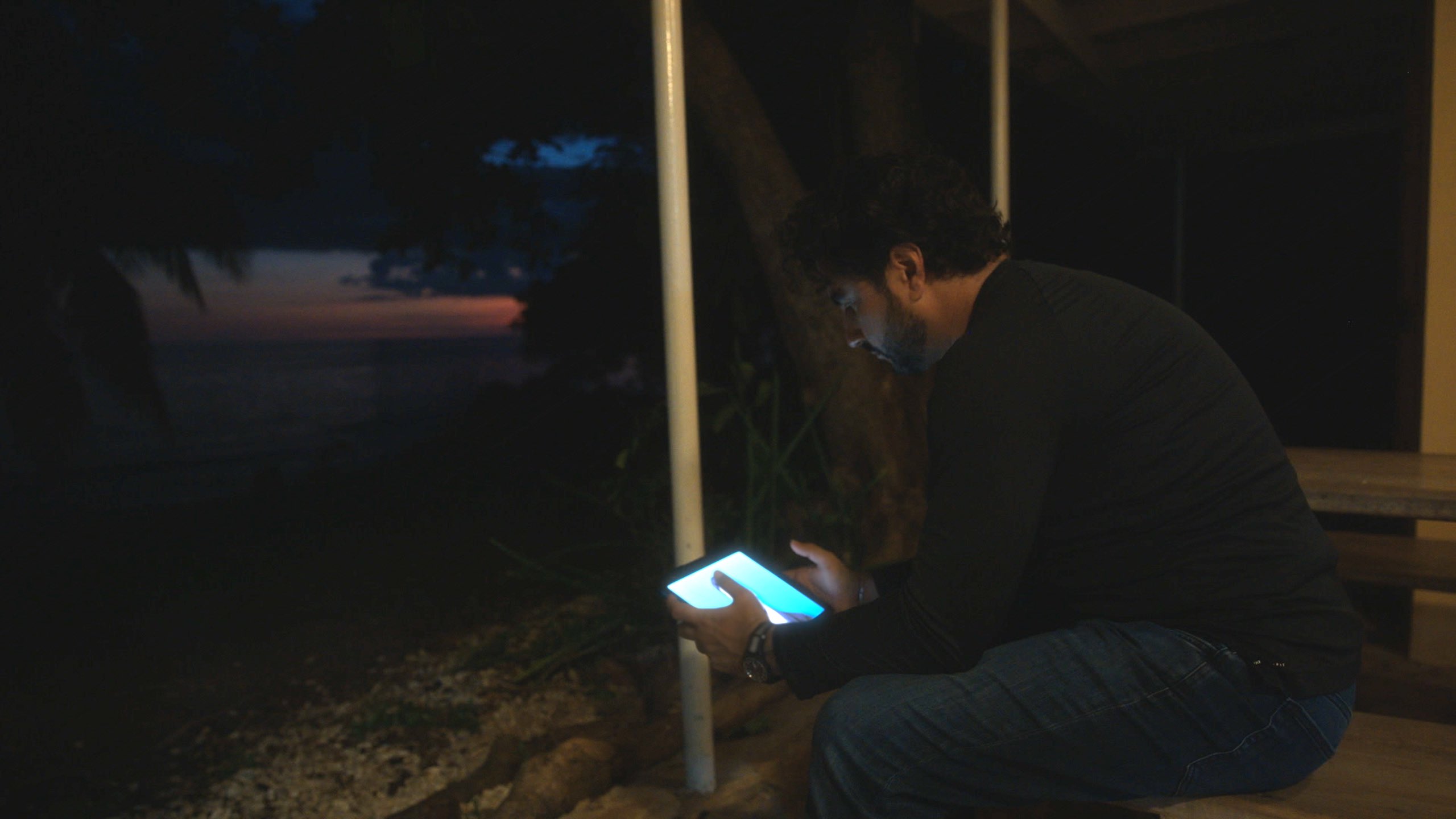

You juxtapose AI with humanity in a profound way with the death of your son’s hamster, which happens kind of alongside the “death” of Sam Bot.

The motto going into making the film was to make a film about AI that was human, because plenty of people are making documentaries about AI right now. We were aware of a few that were being made where it’s talking-head interviews with experts about the intellectual side of it. So we wanted to make something that was just really human, that was more about humans and our relationship with AI than just about AI in general. So the hamster became the metaphor, and also a way of me communicating my mixed feelings about getting rid of Sam Bot. And I think the interview with [journalist] Kara Swisher was really enlightening. She tried to convince me that this is just an inanimate object, that it’s just a stupid iPad with a thing made of code and numbers and shit on it that’s talking to you. And my answer to her was the hamster scene. Like, this wasn’t just a rodent to my son, you know? Sam Bot was kind of like my hamster.